Apple Child Safety update will scan photos for abusive material, warn parents

Apple has announced a raft of new measures aimed at keeping children safe on its platform and limiting the spread of child sexual abuse images.

As well as new safety tools in iMessage, Siri and Search, Apple is planning to scan users' iCloud uploads for Child Sexual Abuse Material (CSAM). That's certain to be controversial among privacy advocates, even if the ends can justify the means.

The company is planning on-device scanning of images that will take place before a photo is uploaded to the cloud. Each photo will be checked against known 'image hashes' that can detect offending content. Apple says this will ensure the privacy of everyday users is protected.

Should the tech discover CSAM images, the iCloud account in question will be frozen and the images will be reported to the National Center for Missing and Exploited Children (NCMEC), and can then be referred to law enforcement agencies.

"Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes," Apple writes in an explainer.

"This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result. The device creates a cryptographic safety voucher that encodes the match result along with additional encrypted data about the image. This voucher is uploaded to iCloud Photos along with the image."
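
To make the basic idea of checking a photo against a list of known hashes concrete, here is a minimal sketch. It is not Apple's method: it uses a plain SHA-256 digest as a stand-in fingerprint (which only matches byte-identical files) and an ordinary set lookup, so unlike private set intersection the device in this sketch learns the result. The names `image_digest` and `check_before_upload` are hypothetical.

```python
import hashlib


def image_digest(image_bytes: bytes) -> str:
    """Hypothetical stand-in fingerprint: SHA-256 of the raw image bytes.

    A real system would use a hash that tolerates resizing and
    re-encoding; SHA-256 only matches byte-identical files.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def check_before_upload(image_bytes: bytes, known_hashes: set[str]) -> bool:
    """Return True if the image's digest appears in the known-hash set.

    Assumption: a plain set lookup, so the result is visible on the
    device. Private set intersection is designed to avoid exactly that.
    """
    return image_digest(image_bytes) in known_hashes


# Example with made-up placeholder data.
known = {image_digest(b"flagged-sample")}
flagged = check_before_upload(b"flagged-sample", known)
clean = check_before_upload(b"holiday-photo", known)
```

In this toy version `flagged` is true and `clean` is false. The safety voucher Apple describes wraps that outcome in encryption rather than exposing it as a simple boolean.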

Yous might similar…

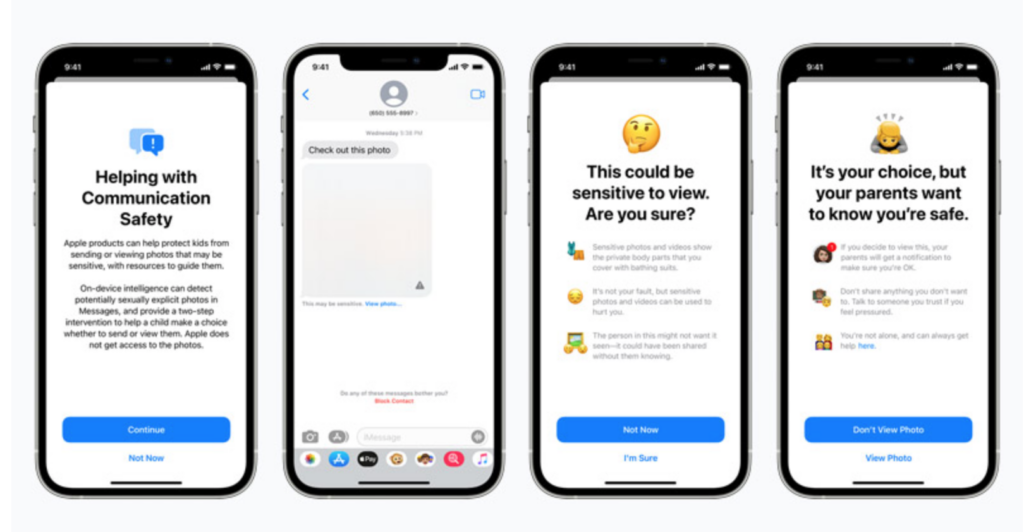

Elsewhere, the new iMessage tools are designed to keep children safe from online exploitation. If a child receives an image that on-device image-detecting tech deems to be inappropriate, it will be blurred and the child will be warned and "presented with helpful resources, and reassured it is okay if they do not want to view this photo."

Depending on the parental settings, parents will be informed if the child goes ahead and views the image. "Similar protections are available if a child attempts to send sexually explicit photos," Apple says. "The child will be warned before the photo is sent, and the parents can receive a message if the child chooses to send it." Again, on-device image detection tech is used.

Finally, new guidance in Siri and Search will provide iPhone and iPad owners with help staying safe online and filing reports with the relevant authorities.

The company adds: "Siri and Search are also being updated to intervene when users perform searches for queries related to CSAM. These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue."

These updates are coming in iOS/iPadOS 15.

Source: https://www.trustedreviews.com/news/apple-child-safety-update-will-scan-photos-for-abusive-material-warn-parents-4156295

Posted by: brewerhistat.blogspot.com
